Here’s a neat little feature I wasn’t aware of until recently. You can make a .NET Dictionary object case-insensitive. i.e. myDictionary[“ABC”] returns the same as myDictionary[“aBc”]. You can read more at:

Thursday, September 1, 2011

Wednesday, August 31, 2011

Returning Multiple Disparate Result Sets as XML From SQL Server Using FOR XML EXPLICIT

<orderformdata> <orders> <order> <orderdate>1/1/2011</orderdate> <ordertypeid>1</ordertypeid> <orderamount>1000.00</orderamount> </order> <order> <orderdate>1/1/2011</orderdate> <ordertypeid>1</ordertypeid> <orderamount>1000.00</orderamount> </order> </orders> <references> <ordertypes> <ordertype id="1" name="Professional Services"/> <ordertype id="2" name="Product"/> <ordertype id="3" name="Support Contract"/> </ordertypes> </references> </orderformdata>

Using SQL Servers FOR XML clause, it’s quite easy to output a result set as XML. With the EXPLICIT directive you can have a great amount of control over how the XML is rendered. However, the examples tend to show how to create XML from a single result set or nested records (say orders and order details). So how to return the above?

Monday, August 22, 2011

Fun with WCF, WSDL & the F5 (Updated)

So this week we pushed out an early iteration of the latest version of our integration tool to a customer as a proof-of-concept for evaluation. One of the features they were most interested in evaluating is the web service interface. Our current customers interact with the existing version of the integration tool through FTP and a UI.

Although the backend functionality is fairly stable, the web service layer itself is not that mature. Not too big a deal as it’s a fairly thin wrapper over our existing service layer. We created, tested and delivered a test .NET client that seemed to work fine and so were reasonably confident that there wouldn’t be too many hiccups.

Not so much. We spent the better part of the day simply trying to get their tool to retrieve and understand our WSDL. The good news is that we finally got it to work.

Tuesday, August 16, 2011

Setting Up a Test Reverse Proxy/SSL Accelerator Environment (or for that matter, a budget production one)

Given that, according to our operations people, BIG-IP boxes start at 5 figures and rapidly go up, they seemed unwilling to purchase one for me. Additionally, for security issues, and to remain in compliance with our SAS-70, I don’t have access to our production environment for testing.

Tuesday, July 26, 2011

Execute-Remotely

One of the really powerful features of PowerShell is the ability to run commands remotely. I used this when I wanted to loop through my test servers from the build machine and run my MSI installs.

The following script loops through an array of machines and returns a list of .log files under the C:\Windows folder. One thing to note. The remote session doesn't have access to any of the local script variables, so we pass them as parameters using the -Args argument of the Invoke-Command cmdlet, receiving them using the param statement inside the remote script block.

$AgentServers = @("MYSERVER1", "MYSERVER2");

ForEach ($AgentServer in $AgentServers) {

$Session = New-PSSession -ComputerName $AgentServer;

$FilePattern = "*.log";

$Arguments = $FilePattern

Invoke-Command -Session $Session -Args $Arguments -Script {

param($FilePattern)

Set-Location "C:\Windows"

Get-ChildItem -Include $FilePattern -Recurse

}

Remove-PSSession -Session $Session

}

Now the first time you run the remote script above, it may well fail. Why? Because you forgot to enable remoting on the target machine. On each machine you want to run remote sessions on you’ll have to run:

Enable-PSRemoting

Note that you’ll have to start PowerShell as administrator to perform this.

Also take note that we’re killing each session using Remove-PSSession when we’re done with it as there is a 5 session limit on each remote server and it’s pretty easy to hit that if you forget to close out prior ones.

On that note, there’s a quick trick on clearing out all those orphaned sessions:

Get-PSSession | Remove-PSSession

Get-PSSession will return a list of all open session objects, piping them to Remove-PSSession which subsequently closes them out.

Monday, July 25, 2011

Run-Script

As I mentioned in an earlier post, just like the DOS command line has batch files, PowerShell can run PowerShell script files. These have the .PS1 extension.

So go ahead, throw a bunch of PowerShell commands together into a .PS1 file in NotePad and save it. Next, navigate to the folder you saved it to and double-click on it. Awesome, you just ran your first PowerShell script! What do you mean it didn’t run? It came up in NotePad?

Oh yeah. By default, for security reasons, double-clicking on a PowerShell script doesn’t run it. To do that you have a couple of options. First is to open up PowerShell and run the script from PowerShell's command line. To do that you simply type:

C:\MyScripts\MyCoolScript.ps1

If your current folder is already C:\MyScripts you’ll type:

.\MyCoolScript.ps1

Note that we prefaced the script file with “.\”. Without that, PowerShell thinks it’s a built-in command and yells that it doesn’t recognize it.

OK, you’ve typed it in, hit enter and away it goes, no? Except that all you see is:

File C:\MyScripts\MyCoolScript.ps1 cannot be loaded because the execution of scripts is disabled on this system. Please see "get-help about_signing" for more details.

At line:1 char:11

+ .\test.ps1 <<<<

+ CategoryInfo : NotSpecified: (:) [], PSSecurityException

+ FullyQualifiedErrorId : RuntimeException

$%!#$%!^&*#…OK, ok, breath…again…deep breath…Yet another security “feature”. With this one type at the command line:

Set-ExecutionPolicy RemoteSigned

Or

Set-ExecutionPolicy Unrestricted

You only need to run this once and it will allow you to run scripts. When setting RemoteSigned, any local scripts will run as-is, but remote scripts will need to be digitally signed. Like Enable-PSRemoting, you’ll need to open PowerShell as administrator.

But I’m not an administrator! The execution policy is not a true security setting, it’s simply there to help prevent the inadvertent running of malicious scripts. You can actually set the execution policy when you open PowerShell from the command line by using the –ExecutionPolicy argument, using the same RemoteSigned or Unrestricted value. This will only set the execution policy for that session.

Note that when using the Set-ExectuionPolicy you can set the scope of the setting using the –Scope argument to be either the current process (-Scope Process, same as setting it on the command line), current User (-Scope CurrentUser) or the local machine (-Scope LocalMachine). The default value is LocalMachine.

Sunday, July 24, 2011

Run-Executable

OK, so one of the promises of PowerShell is that not only does it do all this cool new stuff, but all your favorite DOS commands are aliases of the new PowerShell commands, but that you can also run your old executables just like you used to….

Well not so fast….

Running a straight executable such as MyExe.exe, works just fine as long as you’re in the exe’s folder or it resides in a folder in the PATH environment variable.

Try passing to start passing it command line arguments and things start to get squirly.

This is due to how PowerShell handles command line arguments. PowerShell has three types of arguments:

- Positional – these arguments are expect to be in a specific position such as:

- Run-Command “Param1Value”

- Named – these arguments are proceeded by the parameter name and can be in any position as follows (note that I’m passing a string literal to Param2 and a variable value to Param1:

- Run-Command –Param2 “Param2Value” –Param1 $Param1Value

- Switch Parameters – These parameters are either bolean parameters that are either present or not such as:

- Run-Command –SwitchedOn

PowerShell tries to treat executable paramters the same way. This works great if your executable uses the same format, but if you’re trying to run MsiExec you’ve got a problem.

MsiExec /i MyInstall.msiWill work fine, but try:

$MyCustomInstallFolder = “D:\Custom Program Files”

MsiExec /I MyInstall.msi TARGETDIR=$MyCustomInstallFolder

Not so much. the problem is is that what is actually getting passed is:

MsiExec /i MyInstall.msi “TARGETDIR=D:\Custom Program Files”MsiExec ends up ignoring the TARGETDIR parameter because PowerShell didn’t recognize TARGETDIR as a named parameter and treated it as a positional parameter, surrounding it with quotes because the expanded string contained spaces.

After fighting with this for quite some time (and doing a fair amount of Googling), I ended up writing the following function that utilizes the .NET Process object to execute an executable, passing a string variable for the entire command line.

Function Start-Process

{

Param([string]$Executable,

[string]$Arguments,

[string]$WorkingFolder,

[int]$Timeout = 240000,

[switch]$ShowStandardOutput)

Write-Host ("Starting Process, {0}" -F $Executable);

Write-Host ("Command Line Args: {0}" -F $Arguments);

Write-Host ("Working Folder: " -F $WorkingFolder);

Write-Host ("Timeout: {0}" -F $TimeOut);

Write-Host ("Show Std Out: {0}" -F $ShowStandardOutput);

$ProcessInfo = New-Object -TypeName System.Diagnostics.ProcessStartInfo;

$ProcessInfo.FileName = $Executable;

$ProcessInfo.WorkingDirectory = $WorkingFolder

$ProcessInfo.UseShellExecute = $false;

if ($ShowStandardOutput) {

$ProcessInfo.RedirectStandardOutput = $true;

}

else {

$ProcessInfo.RedirectStandardOutput = $false;

}

$ProcessInfo.RedirectStandardError = $false;

$ProcessInfo.Arguments = $Arguments;

$ProcessInfo.CreateNoWindow = $false;

$ProcessInfo.ErrorDialog = $false;

$Process = New-Object -TypeName System.Diagnostics.Process;

$Process.StartInfo = $ProcessInfo;

$Process.EnableRaisingEvents = $false;

$Process.Start();

if ($ShowSTandardOutput) {

$Output = $Process.StandardOutput.ReadToEnd();

$Output;

}

if (-not $Process.WaitForExit($Timeout)) {

$Process.Kill;

$ProcessInfo;

throw "Start-Process - Process timed out";

}

if ($Process.ExitCode -ne 0) {

$ProcessInfo;

throw "Start-Process - Process failed with exit code - " + $Process.ExitCode

};

}

Since then I’ve read that you can actually run Cmd.exe, passing the entire command line (executable and arguments) as a string, thus doing something similar to what I’m doing with the Process object.

Saturday, July 23, 2011

Control-Flow

PowerShell provides a comprehensive set of flow-control constructs, starting with if/elseif/else as follows:

$Value = 2

If ($Value -eq 1) {

Do-Something

}

ElseIf ($Value -eq 2) {

Do-SomethingElse

}

Else {

Do-SomethingCompletelyDifferent

}

Note that we have a completely different set of comparison and logical operators to remember as follows:

| Equals | -eq |

| Not Equal To | -ne |

| Greater Than | -gt |

| Greater Than or Equal To | -ge |

| Less Than | -lt |

| Less Than or Equal To | -le |

| Not | -not or ! |

| And | -and |

| Or | -or |

Additionally, if you're doing string comparison, you can force case-sensitive or case-insensitive (default comparison is case-insensitive) by prefacing the operator with a c or i e.g. -ceq is a case-sensitive equal comparison.

In addition to the if flow control, PowerShell also has:

- Do While - Do {Code Block} While (Condition)

- While - While (Condition) {Code Block}

- Do Until -

- For - For ($Index = 1; $Index -le 3; $Index++) {Code Block}

- ForEach - ForEach ($MyObject In $MyObjectsArrayOrCollection) {Code Block}

- Switch -

Switch ($MyValue)

{

Result1 {Code Block}

Result2 {Code Block}

Default {Code Block}

}

A couple of good articles on flow control are:

Friday, July 22, 2011

Use-Variable

I am pretty light on the details of variables, particularly around scope, expansion and built-in variables, but PowerShell does have them. Variables are always prefaced with “$”. Declaration and assignment of variables is as simple as:

$Number = 2

Or

$Files = Get-ChildItem

In the first case, we’re assigning the number 2 to the variable $Number. In the second we’re assigning $Files an array of files in the current folder. Remember that depending on the provider this may not be files. In the case of:

SQL:\MYSQLSERVER\DEFAULT\Databases\MyDatabase\Tableswe’d be assigning an array of SMO table objects in the MyDatabase database.

I’m a little shaky on variable expansion. For example:

$Subfolder = “MySubFolder”

Set-Location C:\MyFolder\$Subfolder

will set your current location to C:\MyFolder\MySubFolder. If you have space in your path you could type:

Set-Location “C:\My Folder\$Subfolder”

and the value of $Subfolder will replace $Subfolder in the path. However, I seem to have had cases where the string replacement doesn’t happen and I simply end up with a string “C:\My Folder\$Subfolder”

When in doubt, you can look to string concatenation as follows:

$Subfolder = “MySubFolder”

$Folder = “C:\MyFolder\” + $Subfolder

Or PowerShell’s equivalent of the .NET string.Format(string, arg1, arg2, …) as follows:

Set-Location (“C:\My Folder\{0}” –F $Subfolder)

Although you don't see it often, you can type variables by declaring them with a specific .NET type as follows:

[int]$MyInteger = 1

This will explicitly type the variable as an integer. There are two special types of variables and literal assignments, arrays and hastables. You can define and assign a literal array as follows:

$MyArray = @(1, 2, 3)

$MyValue = $MyArray[1]

This creates a 3 integer, zero-indexed array and then assign the second value, 2 to the variable $MyValue. A hash table variable, or dictionary, creates a list of name/value pairs as follows:

$MyHashTable = @{"Value1" = 123, "Value2" = 456}

$MySecondValue = $MyHashTable["Value2"]

You can add a new value by simply assigning a value to an unused name as follows:

$MyHasTable["Value3"] = 789

Or remove a value using the .Remove method as follows:

$MyHashTable.Remove("Value2")

Don't forget you can do all of this interactively from the command line and viewing the value of the variable is as simple as typing the variable name at the command line and hitting enter.

As noted at the beginning, I’m still a little light on the details of variables. I believe there are a number of scope rules around access to variables inside modules etc. that I am not familiar with so Google will be your friend with this.

A good article I found on variables is PowerShell Tutorial 7: Accumulate, Recall, and Modify Data.

Thursday, July 21, 2011

Process-Pipeline

PowerShell allows you to pipe the results of one command into the next. It doesn’t simply pass along the text output but sends the entire resulting .NET object. This may be an array or IEnumerable collection of objects.

For example, Get-ChildItem *.txt will return a list of files in the current folder. However what it’s actually returning is a list of System.IO.FileInfo objects. Typing Get-ChildItem *.txt | Remove-Item will cause it to pass along that list of objects to the Remove-Item cmdlet which will subsequently delete them.

That’s not so interesting because you could have simply typed Remove-Item *.txt (or Del *.txt). But what if you wanted to delete only text files larger than 10KB? Try this:

Get-ChildItem *.txt | Where-Object {$_.Length -gt 10000} | Remove-itemSo what’s going on here? First we’re taking the output of the Get-ChildItem *.txt cmdlet which returns an array of System.IO.FileInfo objects representing all of the text files and passing it to the Where-Object. One of the possible arguments for the Where-Object (aliased as Where) is a script block.

The script block here is {$_.Length > 10000}. What’s going on here is that the Where-Object cmdlet is passing each input object (i.e. each FileInfo object) into the script block. The passed in object is represented in the script block as “$_”. We’re then testing to see whether it’s Length property is greater than 10000 using the –gt greater than operator and returning only those that evaluate to true. (Yes, PowerShell doesn’t use the standard operator symbols we’re used to but that’s another blog entry).

Next along we’re passing along the resulting text files bigger than 10KB to Remove-Item and deleting them. Note that since we’re dealing with standard .NET FileInfo objects we could have used any of it’s properties such as LastAccessTime or Attributes.

Wednesday, July 20, 2011

WhatIs-PowerShell -?

PowerShell is Microsoft’s scripting alternative to ye olde DOS command line. Although it shares many of it’s concepts, it it immensely more flexible and extensible.

It’s primary purpose is to allow system admins to accomplish more through a command line interface and scripts using a more standard command syntax than the collection of DOS commands, VBScripts and random executables that are available with the standard cmd.exe interface.

At it’s base it’s an interactive shell that allows you to type commands and view the results. Like the DOS command line, you can create script files (.PS1 instead of .BAT). Unlike the DOS command line you can load extensions in the form of Snap-ins and Modules (Snap-Ins are a version 1 concept that can still be loaded but have largely been replaced by Modules in version 2).

CmdLets & Providers

There are two important concepts in PowerShell, CmdLets and Providers.

CmdLets are commands you type at the command line such as Set-Location and Get-ChildItem. Note that these can be aliased. You can type Del *.txt and it will delete all text files in the current folder because Del is an alias of Remove-Item.

You’ll notice that the non-aliased naming convention for CmdLets is Verb-Object. Not only that, but if you define a CmdLet or function that doesn’t use one of the pre-defined verbs, PowerShell will yell at you, although it will still function correctly (You can get a list of approved verbs by typing Get-Verb).

Providers on the other hand allow you to navigate systems through a folder system. The most obvious is the file system. The File System provider allows you to type CD C:\Users (translates to Set-Location C:\Users) which will set your current location to the C:\Users folder.

However, unlike the DOS command line, we’re no longer limited to the file system. Load up the SQL Server provider, type CD SQL:\MYSQLSERVER\DEFAULT\Databases\MyDatabase\Tables, type Dir (translates to Get-ChildItem) and you’ll get a list of the tables in the MyDatabase database on the default SQL instance on MYSSQLSERVER. there are also providers for the registry and IIS, allowing for easy navigation.

Tuesday, July 19, 2011

Hello-PowerShell

I considered MSIs, batch files, .NET apps, and a combination of the above with various command line utilities. Ultimately, I decided that it was finally time to learn PowerShell.

After a headache inducing introduction, I’ve become quite comfortable with and somewhat enamored of it, for certain, sysadmin types of tasks, to the point that I’ve written initial versions of PowerShell modules in C# for the application to help simplify the automation/scripting of setting up integration configurations. Although I didn’t completely get away from MSIs and command line utilities, PowerShell did eliminate the need for some and was able to tie the rest together much more nicely than would be possible with the old batch file.

I am by no means yet an expert, and based on some articles I've scanned I've barely scratched the surface, but over the next few blog entries will share some of the pleasure and pain I’ve experienced in working with it.

A couple of things that will greatly help you to start with. First is Get-Help. typing this followed by any cmdlet name will get you a brief description of the cmdlet, it’s syntax, list of arguments and related cmdlets. Adding the –detailed argument to the Get-Help cmdlet will return detailed information on each of the arguments as well as examples.

If you don’t quite remember the name of the cmdlet, you can type in part of it and Get-Help will return a list of cmdlets containing that string. e.g. searching on “Session” will return all of the session cmdlets. Helpful when you forget that Get-PSSession has a “PS” in it.

Of course the next thing that will help is Google, but if you want to skip that there’s a couple sites that Google has taken me to several times:

- PowerShell Pro (http://www.powershellpro.com/)

- PowerShell ABC's (http://devcentral.f5.com/weblogs/Joe/Tags/PowerShell%20ABCs/default.aspx) - 26 very useful articles by Joe Pruit.

- PowerShell.com (http://powershell.com/cs/)

- PowerShell Intro on MSDN (http://msdn.microsoft.com/en-us/library/aa973757(v=vs.85).aspx)

- Windows PowerShell Blog (http://blogs.msdn.com/b/powershell/)

Thursday, April 28, 2011

QUOTED_IDENTIFIER, Filtered Indexes, SSMS & SQLCMD

So I’ve been working on a project that utilizes filtered indexes and decided I needed to build an automated deployment routine. I had built a set of build scripts, and utilizing SQLCMD mode in SSMS to allow for include files, built a master script to perform the entire build.

During development and initial testing I ran this in SSMS and everything worked fine, but for automation purposes I used SQLCMD.EXE. As soon as I started using SQLCMD Is started to get errors indicating that the QUOTED_IDENTIFIER was incorrectly set for indexed views, filtered indexes etc.

Now I’m aware that indexed views among other objects are picky about their settings and the error message let me know that this was the case for filtered indexes as well. However, the question was, why hadn’t it come up before? In looking through the code I only found one reference where QUOTED_IDENTIFIER was set to OFF and that was in the create database script. Even after changing that the errors were still showing up.

It turns out that by default, a connection through SSMS sets QUOTED_IDENTIFIER to ON, while a connection through SQLCMD sets it to OFF. In SQLCMD it does this after each connect statement, so setting it at the beginning of a script may not cover it if you subsequently run a connect statement after it. The best practice is to run the set statements after each connect.

Reading through the Remarks section of the following MSDN article, SET QUOTED_IDENTIFIER (Transact-SQL), states that the defaults are set differently for different connection types (DB-Library, SQL Server Native Client, ODBC etc.) and thus indicates that SQLCMD and SSMS are probably using different connection types.

Tuesday, April 5, 2011

Debunking the Cult of the Machine SID

So this isn’t especially data oriented, but for any developer who is constantly creating new VMs for development or testing this info might come as a pleasant surprise.

Over the years, when cloning VMs, sometimes by simply copying the VHD files and attaching them to a new machine, we’ve been told that we’ll cause problems because we aren’t creating machines with unique SIDs. As a result, we’ve had to use sysprep, sysinternals NewSID (discontinued) or the VM software of our choice’s cloning tool. Turns out however, our obsession with SIDs was all for nought. The following technet blog post by the author NewSID details why duplicate SIDs don’t matter:

The Machine SID Duplication Myth (and Why Sysprep Matters)

Note that it is still official Microsoft policy to require cloned machines to be syspreped, and as such I would recommend following that policy in a production environment, but for us developers, copy away and stop worrying about your SIDs!

Wednesday, March 23, 2011

SQL’s Choice–Helping SQL Server Pick Deadlock Victims

In my last post I show how to debug deadlocks using SQL Profiler. That’ll help you figure out what’s causing them. But what if for whatever reason you can’t resolve all of them? How do I make sure that a high priority transaction isn’t chosen as the victim?

SQL provides the answer with the SET DEADLOCK_PRIORITY statement. You can pass it a value between –10 and 10 or the predefined values, HIGH, NORMAL or LOW that map to –5, 0 and 5 respectively. A session with a lower value will picked as a deadlock victim over a session with a higher value.

Below are the table and stored procedure scripts from the previous article that will allow us to create a deadlock by executing ProcedureA in one SSMS window and then immediately executing ProcedureB in a second window.

-- Create Tables

CREATE TABLE TableA(

TableAId INT IDENTITY(1,1) NOT NULL,

SomeValue NVARCHAR(50) NOT NULL,

)

GO

CREATE TABLE TableB(

TableBId INT IDENTITY(1,1) NOT NULL,

SomeOtherValue NVARCHAR(50) NOT NULL,

)

GO

-- Create Stored Procedures

CREATE PROCEDURE ProcedureA

AS

BEGIN

SET DEADLOCK_PRIORITY HIGH

BEGIN TRANSACTION

INSERT INTO TableA WITH (TABLOCKX) (

SomeValue)

VALUES (

'SomeValue A')

WAITFOR DELAY '00:00:10'

INSERT INTO TableB WITH (TABLOCKX) (

SomeOtherValue)

VALUES (

'SomeOther Value A')

COMMIT TRANSACTION

END

GO

CREATE PROCEDURE ProcedureB

AS

BEGIN

SET DEADLOCK_PRIORITY LOW

BEGIN TRANSACTION

INSERT INTO TableB WITH (TABLOCKX) (

SomeOtherValue)

VALUES (

'SomeValue B')

INSERT INTO TableA WITH (TABLOCKX) (

SomeValue)

VALUES (

'SomeOther Value B')

COMMIT TRANSACTION

END

GO

Note that the difference here is that the first statement in ProcedureA is SET DEADLOCK_PRIORITY HIGH and in ProcedureB is SET DEADLOCK_PRIORITY LOW. This will ensure that ProcedureB will always be the victim of a deadlock between Procedures A & B.

By placing the SET statement within the stored procedure we’ve set the deadlock priority for the stored procedure alone. If it’s run outside the stored procedure it can be used to set the priority of an entire session.

If your interested in finding out what the current priority is you can use the following query:

SELECT

session_id,

deadlock_priority

FROM sys.dm_exec_sessions

WHERE session_id = @@SPID

So now even if you can’t prevent all deadlocks, at least you can give your mission critical processes priority.

For more information on DEADLOCK_PRIORITY you can read on MSDN at:

Friday, March 18, 2011

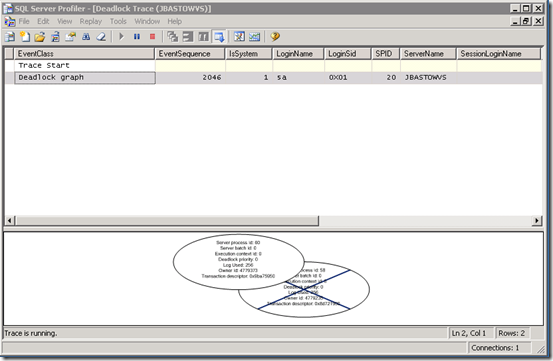

Debugging Deadlocks with SQL Profiler

Don’t you love this:

Transaction (Process ID 60) was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.

SQL helpfully tells you that your process was chosen as the deadlock victim but doesn’t tell you who “another process” was and what the deadlock was on? Certainly makes debugging fun and exciting. It turns out that SQL Profiler has some nice functionality for just this purpose.

First let’s find a way to reliably reproduce a deadlock. Let’s create a couple of tables using the following script:

CREATE TABLE TableA(

TableAId INT IDENTITY(1,1) NOT NULL,

SomeValue NVARCHAR(50) NOT NULL,

)

GO

CREATE TABLE TableB(

TableBId INT IDENTITY(1,1) NOT NULL,

SomeOtherValue NVARCHAR(50) NOT NULL,

)

GO

Now lets create the following tow stored procedures:

CREATE PROCEDURE ProcedureA

AS

BEGIN

BEGIN TRANSACTION

INSERT INTO TableA WITH (TABLOCKX) (

SomeValue)

VALUES (

'SomeValue A')

WAITFOR DELAY '00:00:10'

INSERT INTO TableB WITH (TABLOCKX) (

SomeOtherValue)

VALUES (

'SomeOther Value A')

COMMIT TRANSACTION

END

GO

CREATE PROCEDURE ProcedureB

AS

BEGIN

BEGIN TRANSACTION

INSERT INTO TableB WITH (TABLOCKX) (

SomeOtherValue)

VALUES (

'SomeValue B')

INSERT INTO TableA WITH (TABLOCKX) (

SomeValue)

VALUES (

'SomeOther Value B')

COMMIT TRANSACTION

END

GO

Note that I’ve added a WAITFOR statement between the first and second INSERT statements in ProcedureA. This will allow us to start ProcedureA in one window and give us enough time to start ProcedureB and create a deadlock in a second window. Go ahead and give it a try. One of them will be chosen as the deadlock victim.

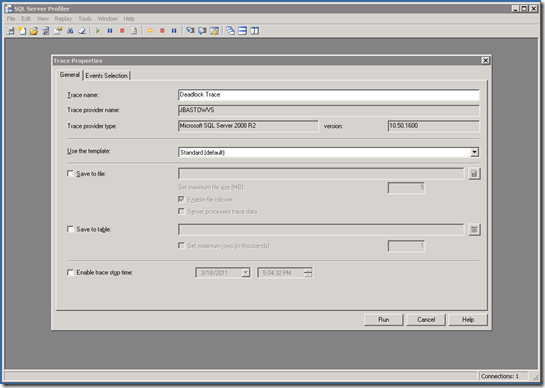

OK. Now that we can create one ourselves, how do we go about debugging it? First, open up SQL Profiler. You’ll get to the initial window to define your trace:

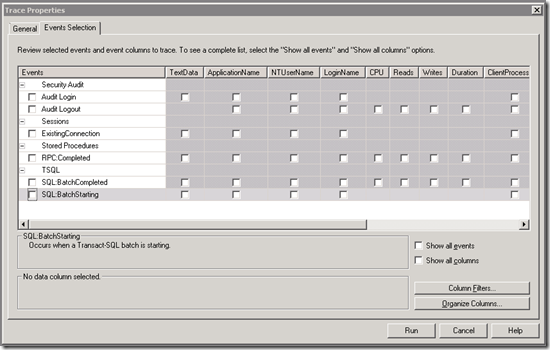

I’ve renamed mine Deadlock Trace. Probably would have left it untitled if it hadn’t been for a blog entry. Next go to the Event Selection tab and remove all of the existing selections as follows:

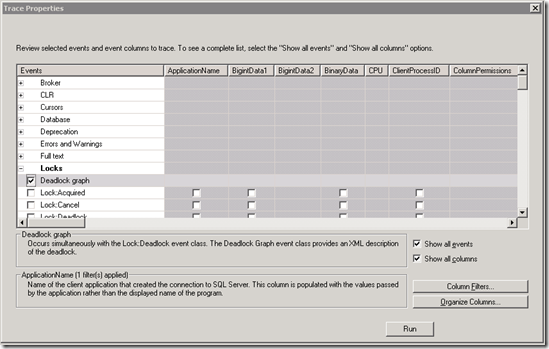

Once you have a clean slate, click on the Show all events and Show all columns checkboxes down on the lower right. Now you can pick the deadlock graph event from under the Locks group as follows:

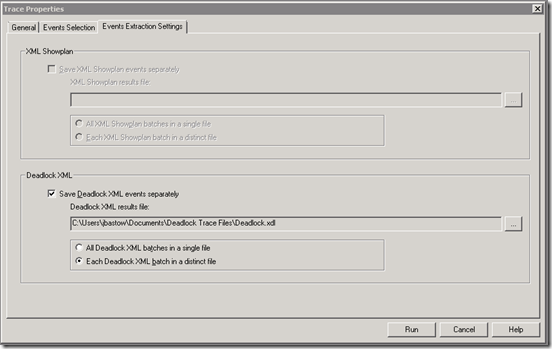

When you do this, you’ll notice that there’s now a third tab, Events Extraction Settings. Navigate to this tab and you’ll be able to specify that profiler should write the deadlock information to a file and where as follows:

Note that I’ve chosen to have it write each deadlock to its own file. I’ve found that to be much more convenient when reading them. Now hit Run and off it goes. Go back to SSMS and run our test procedures again to produce another deadlock. When that’s completed, switch back to Profiler and you’ll see the following:

There you go. Debug away. OK, it’s not showing much more than we got before. We know there were two processes, they deadlocked and one was killed. Time to navigate explorer to the folder we told it to save the deadlocks in. You should see a deadlock trace file as follows:

Now if you simply double-click on it it will open up in Profiler with the same graphical view we got above. Since it’s just an XML file, open it up in the text/XML editor of your choice. This file contains a wealth of information to help you resolve deadlock issues. It’s a little large so I’m not going to include it the whole thing here but here’s some key parts:

<deadlock-list>

<deadlock victim="process4ad288">

<process-list>

<process id="process4ad288" clientoption2="390200" clientoption1="671090784" locktimeout="4294967295" currentdb="11" xactid="4791721" isolationlevel="read committed (2)" loginname="SILKROADTECH\jbastow" hostpid="6456" hostname="JBASTOWVS" clientapp="Microsoft SQL Server Management Studio - Query" lastbatchcompleted="2011-03-18T16:42:10.440" lastbatchstarted="2011-03-18T16:48:44.960" trancount="2" priority="0" ecid="0" sbid="0" spid="58" status="suspended" kpid="7072" schedulerid="4" lockmode="X" xdes="0x800ff950" lasttranstarted="2011-03-18T16:48:44.960" transactionname="user_transaction" ownerid="4791721" waittime="2577" waitresource="OBJECT: 11:1893581784:0 " logused="256" taskpriority="0">

<executionstack>

<frame sqlhandle="0x03000b00f4320d6d38a90201aa9e00000100000000000000" stmtend="568" stmtstart="368" line="13" procname="PlayPen.dbo.ProcedureA">

INSERT INTO TableB WITH (TABLOCKX) (

SomeOtherValue)

VALUES (

&apos;SomeOther Value A&apos;) </frame>

At the top you’ll note it specifies the specific process that was the victim. Below that is a process list that shows the details of each process involved in the deadlock, including the stored procedure, if appropriate. It even shows which statements within the transaction are deadlocked.

After the process list node you’ll see the resource list node. this lists each of the objects (tables, indexes, etc.) that are locked as part of the deadlock, and the type of lock each process has, or is attempting to acquire.

<resource-list>

<objectlock lockPartition="0" objid="1893581784" subresource="FULL" dbid="11" objectname="PlayPen.dbo.TableB" id="lock80022c80" mode="X" associatedObjectId="1893581784">

<owner-list>

<owner id="process493708" mode="X"/>

</owner-list>

<waiter-list>

<waiter id="process4ad288" mode="X" requestType="wait"/>

</waiter-list>

</objectlock>

OK. Now that you know how to diagnose your deadlocks, get going fixing them!

You can read more on MSDN in:

Tuesday, February 15, 2011

SQL Server & Windows Firewall

If you’re installing SQL Server on a machine that has Windows Firewall enabled, you’ll have to configure the firewall to open the correct ports. For a default instance, that’s TCP 1433 for the database engine and UDP 1434 for the SQL Browser (used by client applications to retrieve the dynamic port of a non-default instance of SQL). The following MSDN article details what needs to be configured:

Configuring the Windows Firewall to Allow SQL Server Access

(http://msdn.microsoft.com/en-us/library/cc646023(SQL.100).aspx)

Friday, February 4, 2011

Filtered Indexes Part 3: Data Archiving

In the past, I’ve often had to keep large amounts of transactional and/or inactive data around for historical reporting purposes (or maybe just because I’m a data pack rat by nature). Keeping this in its original table can make querying current data rather costly, so often the approach has been to move it to an archive table or even archive database.

This of course makes querying a bit more complex and requires the management of archiving the data itself. Filtered indexes give us an option to have many of the performance advantages of archiving the data while leaving it in place.

Let’s consider the following scenario. We have a customers table and orders table. It’s been determined that most of the time we will be querying on open orders, where the order table’s CompletedOn field is null. The following script will build the two tables:

-- Create Customers table

CREATE TABLE tblCustomers (

CustomerId INT NOT NULL IDENTITY(1, 1)

,CustomerName NVARCHAR(255) NOT NULL

CONSTRAINT PK_tblCustomers PRIMARY KEY CLUSTERED

(

CustomerId ASC

)

)

GO

-- Create Orders table

CREATE TABLE tblOrders (

OrderId INT NOT NULL IDENTITY(1, 1)

,CustomerId INT NOT NULL

,OrderDate DATETIME NOT NULL

,OrderAmount MONEY NOT NULL

,CompletedOn DATETIME NULL

CONSTRAINT PK_tblOrders PRIMARY KEY NONCLUSTERED

(

OrderId ASC

)

)

GO

-- Add foreign key constraint for CustomerId

ALTER TABLE tblOrders

WITH CHECK ADD CONSTRAINT FK_tblOrders_tblCustomers

FOREIGN KEY(CustomerId)

REFERENCES tblCustomers (CustomerId)

GO

The script below will create some test data. Depending on your machine you may want to tweak some of the parameters. As setup it will create ~100,000 customer records and randomly create between 0 and 100 order records per customer (~5,000,000 records in total).

-- Create test customers

DECLARE @CustomersTemp TABLE (

CustomerName NVARCHAR(255))

DECLARE @RecordCount INT

SET @RecordCount = 0

WHILE @RecordCount < 100000

BEGIN

INSERT INTO @CustomersTemp (

CustomerName)

VALUES (

'Customer ' + CAST(@RecordCount AS NVARCHAR))

SET @RecordCount = @RecordCount + 1

END

INSERT INTO tblCustomers (

CustomerName)

SELECT

CustomerName

FROM @CustomersTemp

-- Create test orders

DECLARE @OrdersTemp TABLE(

CustomerId INT

,OrderDate DATETIME

,OrderAmount MONEY)

DECLARE @CustomerId INT;

DECLARE @OrderCount INT

DECLARE Customers CURSOR

FOR

SELECT

CustomerId

FROM tblCustomers;

OPEN Customers;

FETCH NEXT FROM Customers

INTO @CustomerId;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @RecordCount = 0;

SET @OrderCount = CAST((RAND() * 100.0) AS INT);

WHILE @RecordCount < @OrderCount

BEGIN

INSERT INTO @OrdersTemp (

CustomerId

,OrderDate

,OrderAmount)

VALUES (

@CustomerId

,DATEADD(DAY, RAND() * 3650, '1/1/2000')

,(RAND() * 1000))

SET @RecordCount = @RecordCount + 1

END

FETCH NEXT FROM Customers

INTO @CustomerId;

END

INSERT INTO tblOrders (

CustomerId

,OrderDate

,OrderAmount)

SELECT

CustomerId

,OrderDate

,OrderAmount

FROM @OrdersTemp

CLOSE Customers;

DEALLOCATE Customers;

GO

-- Mark orders completed.

UPDATE tblOrders SET

CompletedOn = GETDATE()

WHERE OrderDate < '1/1/2009'

GO

Note that the last part of the script will mark about 90% of the orders as having been completed.

Now for a query. The query below is getting the record count for all uncompleted orders greater than $500.

SELECT

COUNT(*)

FROM tblOrders

INNER JOIN tblCustomers

ON tblOrders.CustomerId = tblCustomers.CustomerId

WHERE CompletedOn IS NULL

AND OrderAmount > 500

At this point we haven’t defined any indexes other than the primary keys of the tables. On my development server the query at this point the query is taking about 120ms.

Adding the unfiltered index below reduces that time to ~57ms.

-- Add index on OrderAmount

--DROP INDEX IX_tblOrders_OrderAmount ON tblOrders

GO

CREATE NONCLUSTERED INDEX IX_tblOrders_OrderAmount

ON tblOrders (

OrderAmount ASC

,CompletedOn ASC

)

GO

Finally, adding the filtered index below reduces the time to ~34ms.

-- Add filtered index on OrderAmount

--DROP INDEX IX_tblOrders_OrderAmount_Current ON tblOrders

GO

CREATE NONCLUSTERED INDEX IX_tblOrders_OrderAmount_Current

ON tblOrders (

OrderAmount ASC

)

INCLUDE (CompletedOn)

WHERE CompletedOn IS NULL

GO

Not only is the query execution time significantly reduced, but also the space required to store the index is reduced to about 10% in this case. This space savings could be used to add additional frequently used fields as key or included fields into the index to speed queries even more. Note that in this case I’m simply including the CompletedOn field rather than adding it as a key since it’s implicitly keyed by the WHERE clause.

If you review the execution plan for the query, you’ll notice that it automatically reverts to the unfiltered index if you remove the CompletedOn filter from the WHERE clause. Thus, you could essentially have a separate set of indexes for current and archived data.

One final note. In my experience with filtered indexes, I’ve found that filtering on a BIT field doesn’t seem to actually filter. You end up with all the rows indexed as if there were no filter on it. Has anyone else found that to be the case?

Additional Info

Click here to download ZIP file with all SQL scripts

Filtered Index Design Guidelines – Microsoft TechNet (http://technet.microsoft.com/en-us/library/cc280372.aspx)

Article detailing issues with filtered indexes and SET options - Interesting issue with Filtered indexes

Thursday, February 3, 2011

Filtered Indexes Part 2: More With Unique Constraints

Imagine the following table:

CREATE TABLE tblMyTable(

MyTableId int IDENTITY(1,1) NOT NULL,

UniqueCode nvarchar(10) NOT NULL,

IsActive bit NOT NULL DEFAULT 1

)

To enforce a unique constraint on the UniqueCode filed only when the IsActive field is true you would create the following index:

CREATE UNIQUE NONCLUSTERED INDEX IX_tblMyTable_UniqueCode

ON tblMyTable (

UniqueCode ASC

)

WHERE IsActive = 1

In this case the WHERE causing the index to only include those UniqueCode values where the IsActive field is 1 and thus only checking for uniqueness on active records.

Tuesday, February 1, 2011

Filtered Indexes Part 1: Nullable Columns

I’ve toyed with some potential uses of this, such as keeping archived data in its original table (an entry for another day). However, recently a more immediate use came up for it. Solving the age old problem of placing a unique constraint on a nullable field.

An index treats a NULL like any other value and so a unique index on a nullable field only allows a single record with a NULL value. In prior versions of SQL Server you would need to create triggers to enforce a unique constraint. However, in SQL 2008, by filtering the index to exclude NULLs, you can create a unique constraint on nullable.

Imagine the following table:

CREATE TABLE tblMyTable(If we want to create a unique constraint on UniqueCode but retain the ability to have nulls you’d create an index as follows:

MyTableId int IDENTITY(1,1) NOT NULL

,UniqueCode nvarchar(10) NULL

)

CREATE UNIQUE NONCLUSTERED INDEX IX_tblMyTable_UniqueCodeBy using the WHERE clause in the CREATE INDEX script you are removing NULLs from the index and thus ensuring that only non-null values are unique.

ON tblMyTable (

UniqueCode ASC

)

WHERE UniqueCode IS NOT NULL